Multi-View Vehicle Re-Identification using Temporal Attention Model and Metadata Re-ranking.

Published in 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, 2019

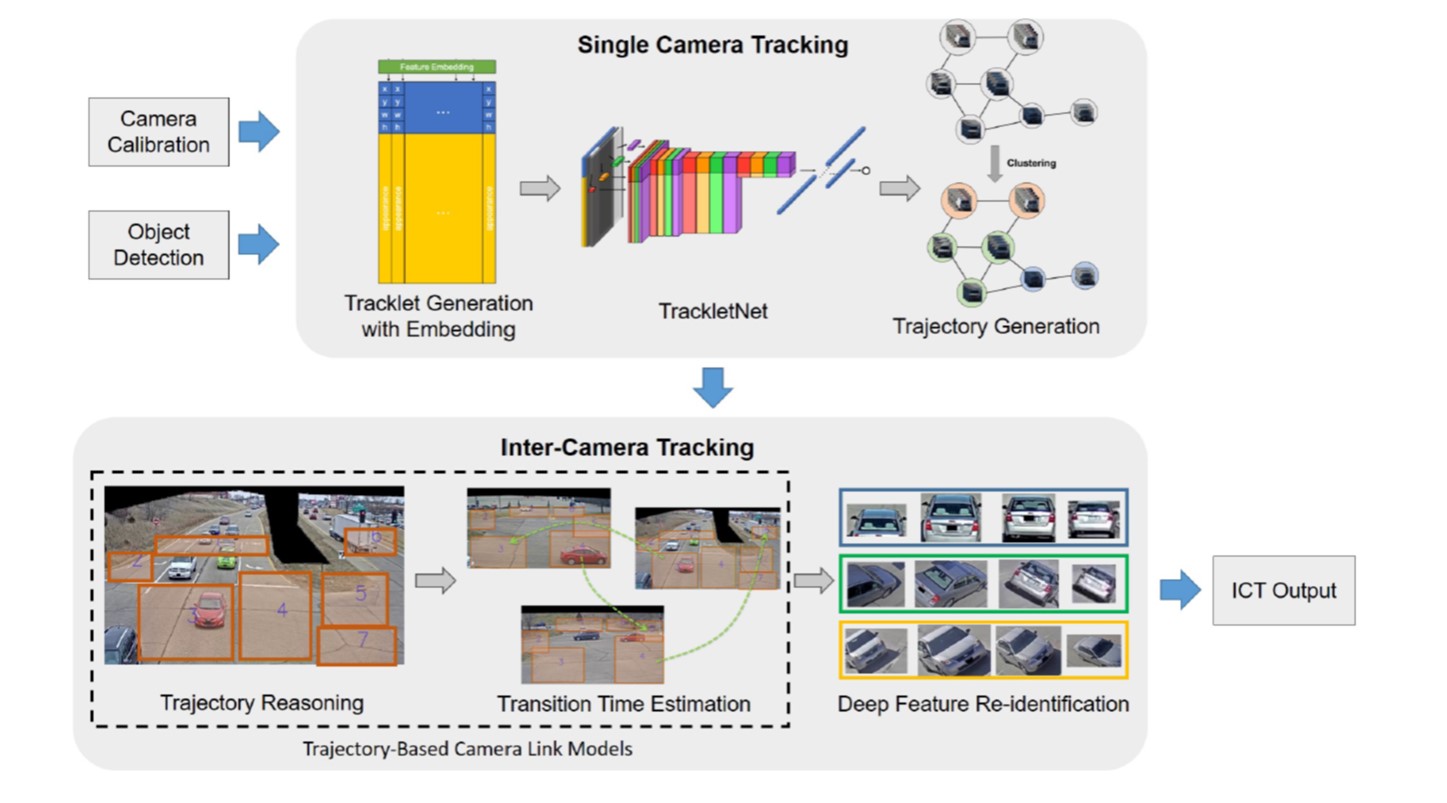

Object re-identification (ReID) is a difficult task that requires matching an object across different, non-overlapping camera views. Many recent works on person ReID take advantage of appearance, human pose, temporal constraints, and other cues. Vehicle ReID is even more challenging: vehicles offer fewer discriminative features than people, and matching is further complicated by changes in viewpoint orientation and lighting conditions and by high inter-class similarity. In this paper, we propose a viewpoint-aware temporal attention model for vehicle ReID that uses deep features extracted from consecutive frames while taking vehicle orientation and metadata attributes (i.e., type, brand, and color) into consideration. In addition, re-ranking with a soft decision boundary is applied as post-processing to refine the results. Evaluated on the CVPR AI City Challenge 2019 dataset, the proposed method achieves an mAP of 79.17% and ranked second in the competition.
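The abstract names two mechanisms, temporal attention over consecutive frames and metadata-aware re-ranking with a soft decision boundary, without spelling them out. The Python below is a minimal, hypothetical sketch of those two ideas, not the authors' implementation. It assumes temporal attention is a softmax-weighted average of per-frame features (the per-frame scores stand in for whatever viewpoint-aware attention the model predicts), and it models the soft boundary as an additive penalty based on predicted attribute probabilities rather than a hard filter. The function names, the `lam` weight, and the `1 - P(same class)` penalty are illustrative assumptions.

```python
import math
from typing import Dict, List, Sequence, Tuple

# Hypothetical types: a feature vector, and per-attribute class probabilities
# (e.g. {"color": [...], "type": [...]}) from some attribute classifier.
Vector = List[float]
Metadata = Dict[str, List[float]]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over per-frame attention scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention_pool(frame_feats: Sequence[Vector], frame_scores: Sequence[float]) -> Vector:
    """Collapse a track of frame-level features into one track-level feature.

    frame_scores stands in for the (assumed viewpoint-aware) attention logits
    a model would predict per frame; here they are simply given as inputs.
    """
    weights = softmax(frame_scores)
    dim = len(frame_feats[0])
    return [sum(w * f[d] for w, f in zip(weights, frame_feats)) for d in range(dim)]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def same_attribute_prob(p: Sequence[float], q: Sequence[float]) -> float:
    """Probability that two independent predictions pick the same class."""
    return sum(a * b for a, b in zip(p, q))


def rerank_with_metadata(
    query_feat: Vector,
    query_meta: Metadata,
    gallery: Sequence[Tuple[str, Vector, Metadata]],
    lam: float = 1.0,
) -> List[str]:
    """Rank gallery IDs by appearance distance plus a soft metadata penalty.

    Instead of discarding candidates whose predicted attributes disagree with
    the query (a hard boundary), each attribute adds a penalty of
    1 - P(same class), scaled by the hypothetical weight lam.
    """
    scored = []
    for gid, feat, meta in gallery:
        d_app = euclidean(query_feat, feat)
        penalty = sum(1.0 - same_attribute_prob(query_meta[k], meta[k]) for k in query_meta)
        scored.append((d_app + lam * penalty, gid))
    scored.sort()
    return [gid for _, gid in scored]


if __name__ == "__main__":
    # Two frames with equal scores -> plain average of the frame features.
    print(temporal_attention_pool([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]))  # [0.5, 0.5]

    query_meta = {"color": [1.0, 0.0]}
    gallery = [
        ("a", [1.0, 0.0], {"color": [0.0, 1.0]}),  # closer, but color disagrees
        ("b", [1.5, 0.0], {"color": [1.0, 0.0]}),  # farther, color agrees
    ]
    print(rerank_with_metadata([0.0, 0.0], query_meta, gallery, lam=0.0))  # ['a', 'b']
    print(rerank_with_metadata([0.0, 0.0], query_meta, gallery, lam=1.0))  # ['b', 'a']
```

Setting `lam=0` recovers a pure appearance ranking; increasing it lets attribute disagreement outweigh a small appearance gap without removing any candidate outright, which is the practical difference between a soft and a hard boundary in this sketch.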

Recommended citation: Huang, T. W., Cai, J., Yang, H., Hsu, H. M., & Hwang, J. N. (2019, June). Multi-View Vehicle Re-Identification using Temporal Attention Model and Metadata Re-ranking. In CVPR Workshops (Vol. 2). https://openaccess.thecvf.com/content_CVPRW_2019/papers/AI%20City/Huang_Multi-View_Vehicle_Re-Identification_using_Temporal_Attention_Model_and_Metadata_Re-ranking_CVPRW_2019_paper.pdf